PANN realizes the advantages of ANNs predicted by science.

The Progressive Artificial Neural Network incorporates recent discoveries in neuroscience. It operates similarly to a natural neural network. The benefits of this similarity open the path to competitive opportunities for our customers.

PANN’s novel architecture and training algorithm resolve many of the problems in existing ANNs. PANN can run on various devices (CPUs, GPUs, programmable microchips, memristor chips, optical microprocessors, etc.) while improving and expanding their operational capabilities.

In particular, PANN enables:

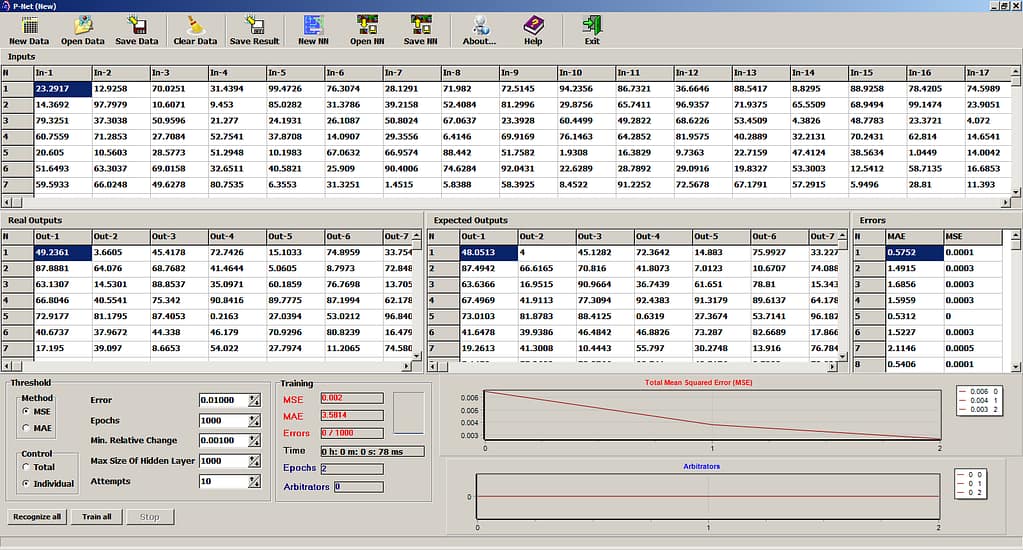

- High-speed training with high accuracy for approximation, classification, and interpolation.

- Training on a CPU that is at least 3,000 times faster than with existing ANNs.

- Training on one GPU that is 200,000 times faster, with speed scaling in proportion to the number of GPUs.

- Fully parallel computation: training speed increases linearly with additional GPUs.

- Batch training on the entire training set: weights are corrected after every epoch of images, not after every image. Recognition likewise processes the entire batch of images at once rather than one image after another.

- Ability to train the network with additional images without retraining the entire network.

- Reliability and resistance to network paralysis.

PANN can be implemented in both digital and analog forms, which creates additional advantages.

PANN software

PANN makes it possible to create truly smart software with high processing speed and an unlimited amount of processed information. In addition:

- PANN is scalable and can be built at any size.

- The PANN architecture and training algorithm have analogs in biological neural networks.

- Complex calculations are not necessary.

- Multiple repeated calculations are not necessary.

- Calculations and weight correction are performed using matrix algebra. (US Patent 9,390,373)

PANN can replace existing ANNs and be used to develop new advanced software for:

- Big and super big data;

- Fast performance;

- Processing unindexed data;

- Data protection;

- High reliability of the system;

- Solving unsolved problems.

PANN on Graphics Processing Units

PANN is extremely fast on graphics processing units (GPUs). This makes it possible to build a supercomputer based on GPUs and PANN. The market for GPU-based supercomputers is already about $18B.

The market is ready for this:

- Many supercomputer developers, for example Cray, IBM, Dell, and HPE, use GPUs for parallel calculations to increase operational speed.

- Some companies use GPUs to speed up ANNs. NVIDIA, for example, uses GPUs to make ANNs more than 20 times faster than on CPUs.

What makes all this possible: PANN and its key features

PANN’s new features include a distributor and a set of multiple weights at each synapse.

US Patent 9,390,373

Input signals activate the corresponding weights on a synapse. The distributor selects one or more weights depending on the input signal magnitude, and the value of each selected weight is sent to the neuron.
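The selection step can be illustrated with a minimal Python sketch. It is not taken from the patent: the class name `Synapse`, the interval `boundaries`, and the choice of exactly one weight per input signal are assumptions made for illustration (the text allows one or more weights to be selected).

```python
from bisect import bisect_right


class Synapse:
    """Hypothetical synapse: several weights plus a distributor that picks one."""

    def __init__(self, boundaries, weights):
        # boundaries: sorted interval edges (assumed); one weight per interval.
        if len(weights) != len(boundaries) + 1:
            raise ValueError("need exactly one weight per interval")
        self.boundaries = list(boundaries)
        self.weights = list(weights)

    def select(self, signal):
        """Distributor: map the signal magnitude to the index of its interval."""
        return bisect_right(self.boundaries, signal)

    def transmit(self, signal):
        """Value forwarded to the neuron: the weight selected for this signal."""
        return self.weights[self.select(signal)]


# Four intervals over [0, 1] with arbitrary example weights.
synapse = Synapse(boundaries=[0.25, 0.5, 0.75], weights=[0.1, -0.3, 0.8, 0.4])
print(synapse.select(0.6), synapse.transmit(0.6))  # 2 0.8
```

In this sketch the input magnitude only decides which weight is active; the signal itself is not multiplied by the weight.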

As a result, PANN weight correction, i.e., training, is accomplished in a single step of error compensation carried by the retrograde signal. This step takes into account only the information the neuron received from its synapses during training.

Training PANN on the next image does not depend on its training on the previous image. The training error is fully compensated for each image used in training. Thus, calculations and weight correction can be performed using matrix algebra, and complex, repeated calculations are not necessary.
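One way to make the matrix-algebra claim concrete is the following sketch, which rests on assumptions not stated in the source: the network has $m$ inputs, each distributor selects exactly one weight per synapse, and an image is encoded as a 0/1 vector $s$ with a 1 at every selected weight, so that $s^\top s = m$. The symbols $W$ (weight matrix), $t$ (target output), $y$ (actual output), and $e$ (error) are introduced here for illustration only.

$$
y = W s, \qquad e = t - y, \qquad W' = W + \frac{1}{m}\, e\, s^\top
$$

$$
W' s = W s + \frac{1}{m}\, e \,(s^\top s) = y + e = t
$$

Under these assumptions, a single correction reproduces the target $t$ for that image exactly, with the error shared equally among the $m$ active weights of each neuron.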

In its basic variant, PANN corrects all weights that contribute to the error on a particular neuron, applying the same correction value to every active weight. It has no activation function (or has a linear activation function), which drastically simplifies and reduces the calculations.
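The basic variant can be sketched in a few lines of Python for a single neuron and a single image. The function names `neuron_output` and `correct`, the data layout `weights[i][k]`, and the rule of dividing the error equally by the number of active weights are illustrative assumptions, not the patented implementation.

```python
def neuron_output(weights, active):
    """Sum the weights selected by the distributors; no activation function."""
    return sum(weights[i][k] for i, k in enumerate(active))


def correct(weights, active, target):
    """Single-step error compensation: add the same value to every active weight."""
    error = target - neuron_output(weights, active)
    delta = error / len(active)  # equal share of the error per active weight
    for i, k in enumerate(active):
        weights[i][k] += delta
    return error


# weights[i][k]: weight k on synapse i of one neuron (3 synapses, 4 weights each).
weights = [[0.0] * 4 for _ in range(3)]
active = [2, 0, 3]  # weight index chosen by each distributor for one image

correct(weights, active, target=1.5)
print(neuron_output(weights, active))  # 1.5
```

After the single call to `correct`, the neuron reproduces the target for this image, and a second call would find no remaining error. The sketch covers one neuron and one image only.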

These advantages enable a thousand-fold increase in PANN training speed.
